I've had this conversation at least 10 times in the past few months:

"How do we handle metering for our AI agents? The token tracking is getting complex, and our costs are all over the place."

My answer quite often surprises people: Metering isn't your problem.

Not because it's technically impossible, but because you’ve not understood what you should be measuring.

The token obsession

I’ve seen this so many times:

- They use token-heavy frameworks

- They spend months building sophisticated token tracking systems

- They create complex cost allocation algorithms

- Sometimes, they obsess over GPU utilization metrics

- They end up having to build real-time consumption dashboards to alert their customers to usage

Then then they wonder why customers hate their pricing and churn after pilots.

Yes, the technical solution was good, but the problem was wrong.

AI metering is much simpler for agents (not harder)

I’ve built a few billing systems, so let me tell you. AI agent metering is fundamentally easier than traditional SaaS or even other AI systems because:

Lower volume, cleaner signals:

- Agents have discrete, countable outcomes (not continuous behavior)

- Agents operate at roughly human-scale frequencies (hundreds of actions, not millions of events)

- Agents tend to have clear success/failure states

- Agents, in some verticals, have obvious attribution (this agent did this task) - for example in customer support

Compare this to traditional AI metering challenges:

- High-volume event streams requiring real-time processing

- Complex user session tracking across devices

- Ambiguous value attribution across features

- Edge cases around partial usage

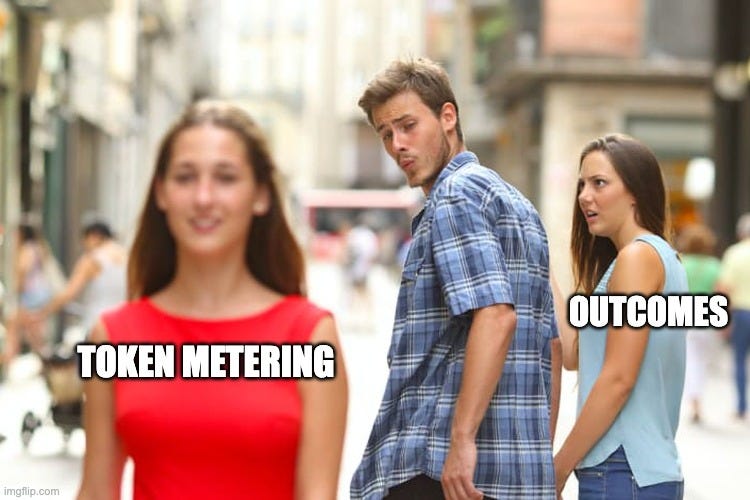

The problem being solved: Input vs. Output confusion

Everyone gets stuck on metering because they’re coming from a mindset that’s copying what they’ve seen with OpenAI or other foundational models.

Sometimes, it’s where consumption correlates with value.

But AI agents are very different.

In SaaS: More usage generally = more value

- More seats = more users getting value

- More storage = more data preserved

- More API calls = more functionality accessed

In AI: Consumption has zero correlation with value

- Email A: 5,000 tokens → Perfect sales email

- Email B: 25,000 tokens → Same quality email (with much longer context window)

Should Email B cost 5x more? Absolutely not.

The Technical Architecture You Actually Need

If metering isn't the hard problem, what is?

Outcome attribution

This is where the real challenge lies, in my opinion.

The hard problems are:

- Defining clear success criteria

- Handling partial successes (do you still charge for part of a workflow?)

- Attribution across multi-step workflows

- Measuring quality and building guardrails

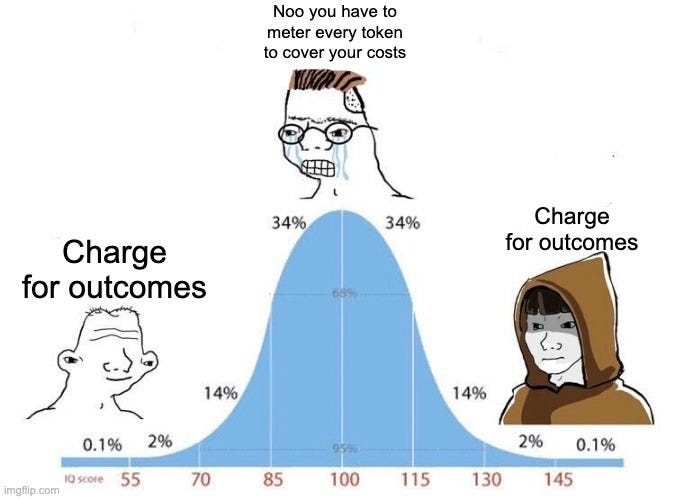

Token counting? That's the easy part.

But that’s why so many companies end up counting tokens. It’s easier.![]()

But what about costs? LLM tokens match up to costs!

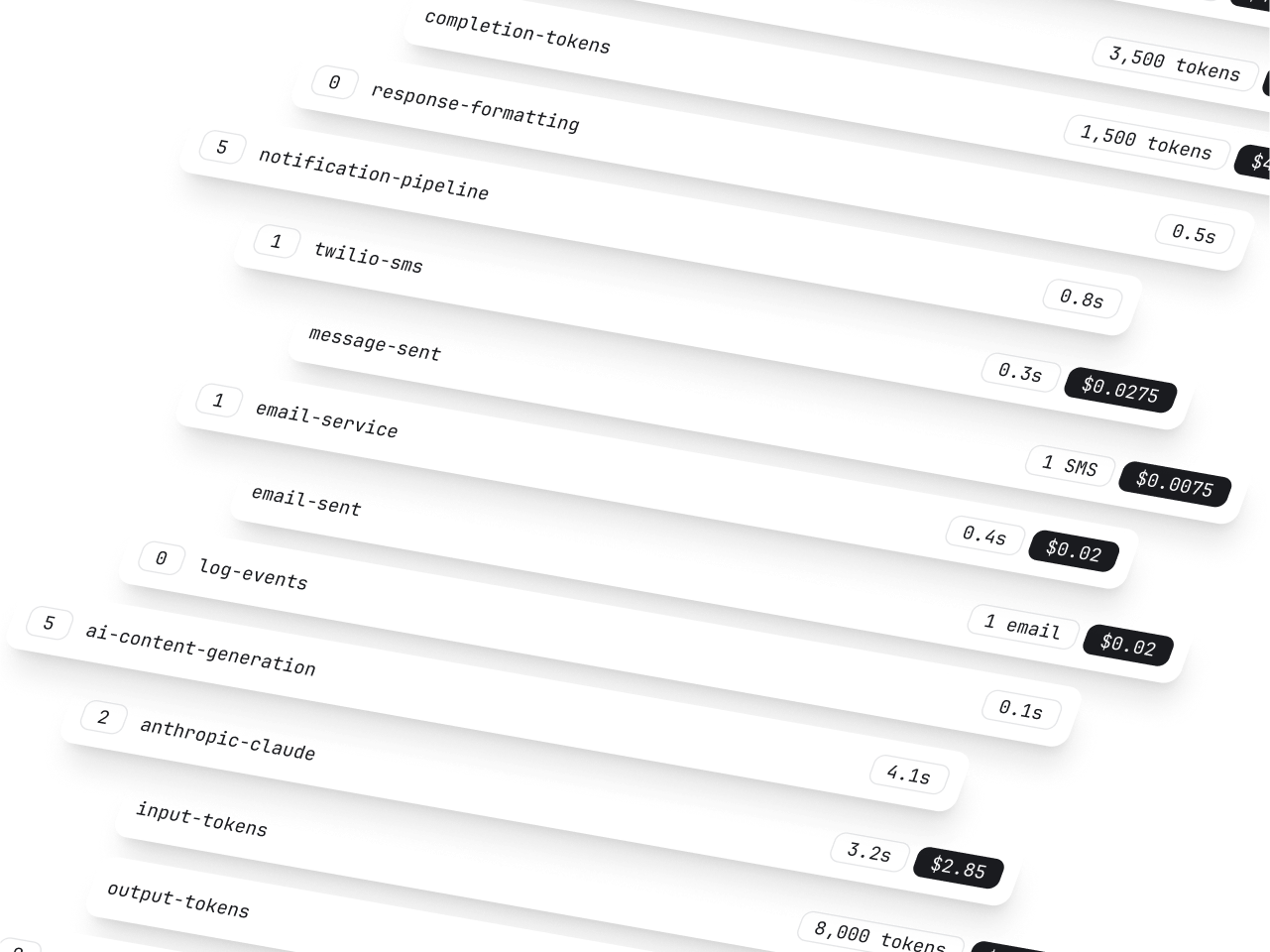

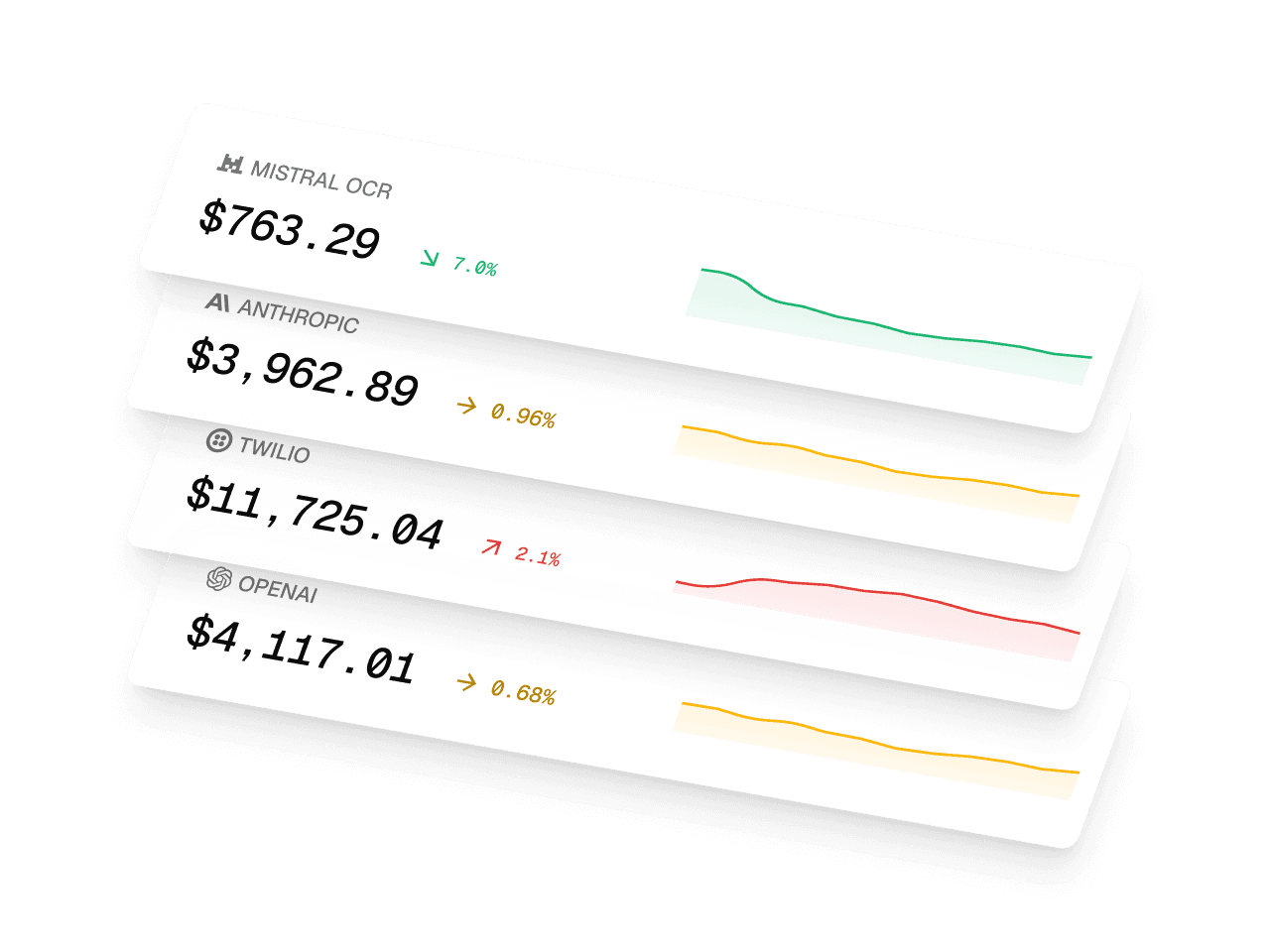

You absolutely should track consumption - for internal optimization, not customer billing.

Paid lets you do both in one go. You can track costs and charge your customer at the same time.

The price of the outcome will be based on a separate pricing you agree with your customers, not directly tied to a token cost.

paid = Paid::Client.new( [...] )

paid.usage.record_usage(

signal: {

agent_id: "agent_1",

event_name: "document_processed",

customer_id: customer_id

data: {

'llm_tokens': 15000,

'llm_cost': 0.045,

'infrastructure_cost': 0.023,

'processing_time': 45.2,

}

}

)

Think of it like a restaurant: You pay $12 for a pizza, while the restaurant tracks labor costs and rent obsessively. But they don't charge you $2.50 for flour + $0.30 for tomatoes + $4.20 for chef time.

The Bottom Line

You shouldn’t build your own elaborate metering systems for AI agents.

Agent behaviour makes it easier than traditional SaaS, but the business model makes it irrelevant to meter.

The companies we see succeed the most spend time on the success criteria, not on real-time token consumption tracking - and definitely not on GPU inference time.

Your customers don't care about your infrastructure costs. They care about the business problems you solve.

Meter for margins. Bill for outcomes!

Stay ahead of AI pricing trends

Get weekly insights on AI monetization, cost optimization, and billing strategies.

Monetize AI Without the Headache

The billing platform built for AI companies. Launch pricing models, track costs, and optimize margins—no engineering lift.

- Track AI costs by model & customer

- Launch usage-based pricing fast

- Know your margin on every deal

- Integrate in minutes