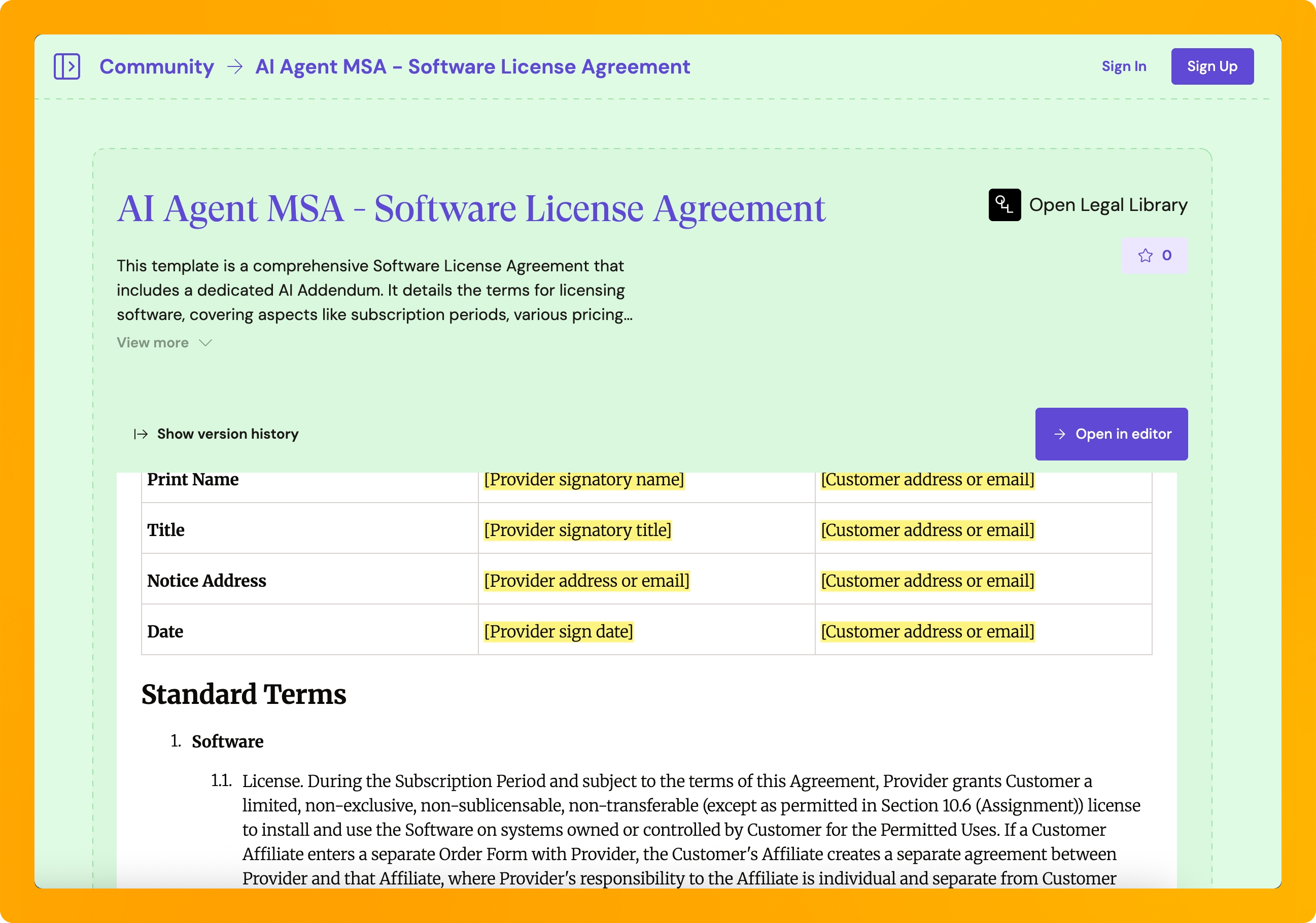

We've partnered with GitLaw to launch something that should have existed from day one: a Master Services Agreement specifically designed for AI agents.

Not because we love legal documents (we don’t), but because the contracts most agent companies are using create problems they don't see until something breaks.

The problem with using SaaS contracts for agents

Most AI agent companies are still using SaaS contracts, which makes no sense.

Your software isn't just sitting there helping someone fill out a form. It's booking meetings, writing code, making decisions. When something goes wrong, who's liable?

Standard contracts don't answer that question. They assume software waits for human instructions. Click button, thing happens, done.

But your agent operates differently. It decides which prospects to contact. It writes outreach messages. It follows up based on response patterns. It learns from interactions and adjusts behavior over time.

Those are autonomous actions. And when your agent does something unexpected, the gap between what your contract says and what your product does creates legal exposure you can't price for.

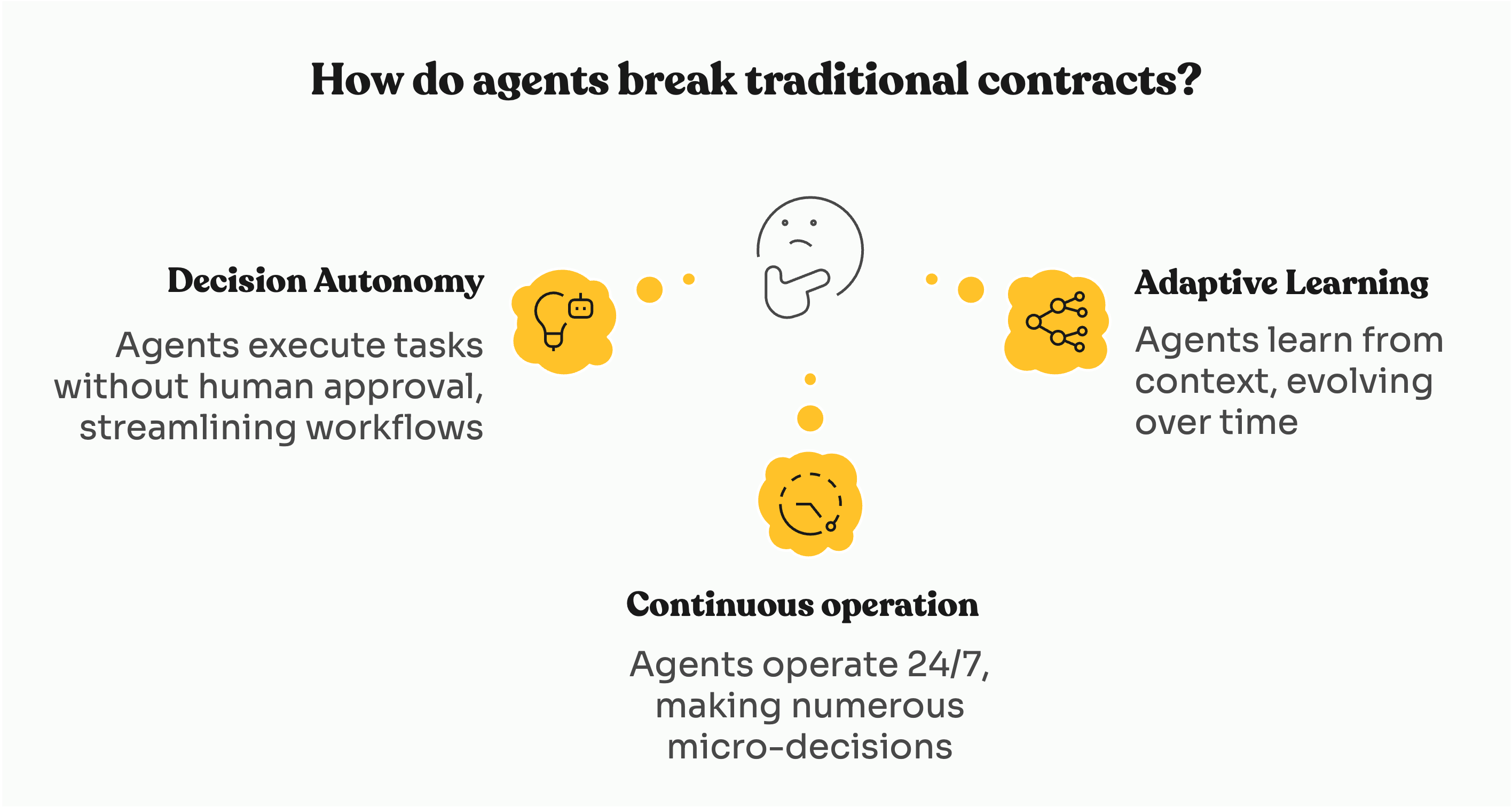

Three ways agents break traditional contracts

Agents make decisions without approval

Your workflow agent doesn't suggest next steps. It executes them. Sends emails. Updates records. Moves data between systems. No human clicking approve at every stage.

Agents act continuously

Traditional software processes tasks one at a time when asked. Agents run 24/7, making hundreds of micro-decisions. Remember the Ford dealership chatbot that hallucinated a free truck offer? That's what happens when autonomous systems operate under contracts written for passive tools.

Agents adapt over time

Static software behaves the same way in every deployment. Agents learn from context, adjust to patterns, and change behavior based on accumulated data. The system you shipped six months ago operates differently today.

Your SaaS contract wasn't built for any of this.

What the Agentic MSA actually covers

Working with Nick and the GitLaw team, we identified the contract gaps that create the most exposure for agent companies. The new MSA addresses three critical areas:

Agent classification and decision responsibility

The contract establishes that your agent functions as a sophisticated tool, not an autonomous employee. When a customer's agent books 500 meetings with the wrong prospect list, the answer to "who approved that?" cannot be "the AI decided."

It has to be "the customer deployed the agent with these parameters and maintained oversight responsibility."

The MSA includes explicit language in Section 1.2 that protects you from liability for autonomous decisions while clarifying customer responsibility.

Liability limitations and risk allocation

AI agents hallucinate. They produce confident outputs that turn out wrong. The MSA includes explicit disclaimers that agent outputs require human verification before material business decisions.

It also includes damage caps appropriate for unpredictable systems: typically 12 months of fees, with exclusions for indirect losses. That's not being difficult. It's acknowledging you can't predict every edge case in software that learns and adapts.

Section 7 covers liability limitations. Section 4.1 adds the AI-specific disclaimers about output accuracy.
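To make the cap arithmetic concrete, here is a minimal sketch in Python. It assumes the cap is 12 months of a flat monthly fee and that indirect losses are excluded entirely. The names `Claim` and `recoverable_amount` are hypothetical, and the actual MSA wording governs how fees and exclusions are defined.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    """A customer claim, split the way a liability clause might split it (hypothetical)."""
    direct_losses: float
    indirect_losses: float  # e.g. lost profits; assumed to be excluded


def recoverable_amount(claim: Claim, monthly_fee: float, cap_months: int = 12) -> float:
    """Return the amount recoverable under a fee-based cap.

    Assumption: the cap equals `cap_months` of a flat monthly fee, and
    indirect losses are not recoverable at all.
    """
    cap = monthly_fee * cap_months
    # Only direct losses count, and never more than the cap.
    return min(claim.direct_losses, cap)


# Example: $2,000/month contract, large claim with mostly indirect losses.
claim = Claim(direct_losses=50_000, indirect_losses=200_000)
capped = recoverable_amount(claim, monthly_fee=2_000)
```

Under these assumptions, a $2,000 monthly fee caps exposure at $24,000, even though the claim lists $250,000 in total losses.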

Data ownership and training rights

This kills more deals than any other contract issue. Your agent ingests customer data and generates outputs. You might want to use those interactions to improve your models.

Customers panic when they hear that. They imagine their proprietary data training models that help competitors.

The MSA establishes that customers own their data and any agent outputs. Then it provides separate, customizable language about using de-identified, aggregated data for training purposes. With clear opt-out options.

Most customers accept training use when it's explained clearly. Trying to slip it in through vague language destroys trust.

Section 2.1 covers ownership. The customizable training permissions are set through the cover page variables.
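One way to keep those training terms honest in practice is to encode them as an explicit check before any customer record reaches a training pipeline. The sketch below is illustrative only: `TrainingPermissions`, `Record`, and `may_use_for_training` are hypothetical names, and the fields are assumptions loosely modeled on the idea of cover page variables, not the MSA's actual variable names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingPermissions:
    """Hypothetical per-customer setting, standing in for a cover page variable."""
    opted_out: bool = False


@dataclass(frozen=True)
class Record:
    """A unit of interaction data tied to one customer (hypothetical shape)."""
    customer_id: str
    deidentified: bool
    aggregated: bool


def may_use_for_training(record: Record, permissions: dict[str, TrainingPermissions]) -> bool:
    """Allow training use only for de-identified, aggregated data from customers who have not opted out."""
    perms = permissions.get(record.customer_id)
    # Default to "no" when a customer has no recorded permissions or has opted out.
    if perms is None or perms.opted_out:
        return False
    return record.deidentified and record.aggregated


# Example customers: one accepted training use, one opted out.
permissions = {
    "acme": TrainingPermissions(),
    "globex": TrainingPermissions(opted_out=True),
}
```

Defaulting to "no" for unknown customers is a design choice in this sketch: it keeps a missing contract record from silently turning into training consent.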

Why this matters for agent monetization

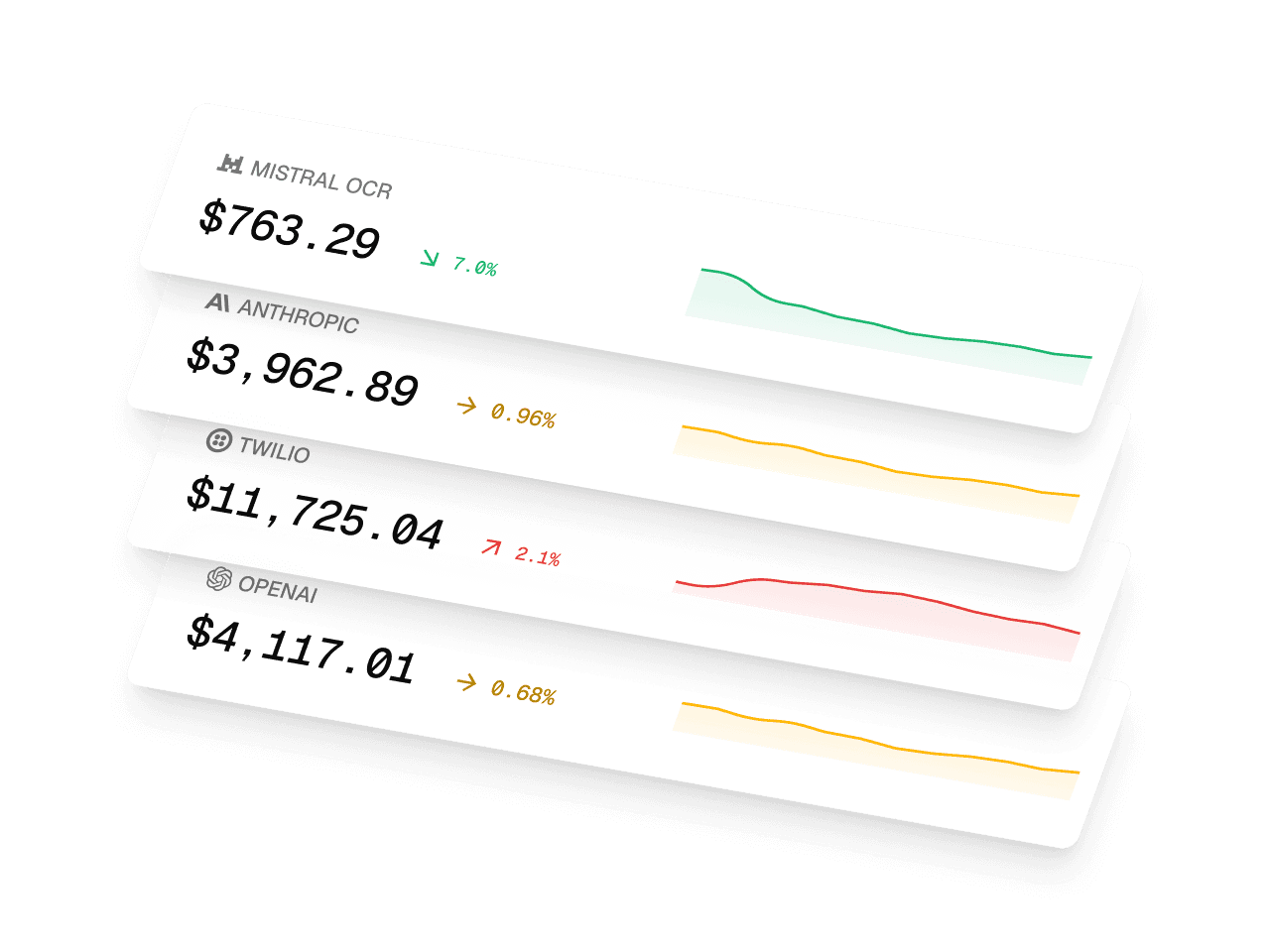

At Paid, we solve billing and cost tracking for AI agents. But we kept hearing the same problem before companies even got to pricing.

Founders would tell us they couldn't figure out how to charge for agents. Then we'd look at their contracts. They were trying to price outcome-based work using terms written for seat-based software.

"Most AI agent companies are still using SaaS contract language, which makes no sense. Your software isn't just sitting there helping someone fill out a form. It's booking meetings, writing code, making decisions. When something goes wrong, who's liable? The standard contracts don't answer that. We kept hearing this from founders, so when GitLaw said they were building an agent-specific MSA, we jumped in. Builders need legal frameworks that match what their agents actually do." - Manny Medina, Paid CEO

You can't bill for outcomes if your contract only covers usage. You can't price based on value delivered if your liability framework assumes predictable, passive behavior. You can't protect your margins when the legal foundation doesn't match what your product does.

The contract shapes everything that comes after. Get it wrong and your entire business model sits on shaky ground.

How to use the Agentic MSA

The MSA is open source and free to use. You can access it directly in the GitLaw Community or ask the GitLaw AI Agent to generate a customized version for your specific needs.

Because the law around AI agents is evolving rapidly, treat this as a starting point, not a substitute for legal advice. Work with a commercial lawyer to customize it for your situation.

The template uses Common Paper's Software Licensing Agreement and AI Addendum as a foundation, adapted for the unique characteristics of AI agents.

Nick and the GitLaw team built this based on patterns from reviewing hundreds of agent contracts. We contributed our research from working with dozens of agent companies on monetization challenges.

Together, we're building the infrastructure the agent economy needs. Legal frameworks that match how agents actually work. Billing systems that align pricing with value delivery. Cost tracking that protects margins.

Because agents aren't just another SaaS feature. They're a fundamentally different product category that needs different infrastructure.

What happens next

Legal frameworks always lag behind technology. Right now that lag creates real risk for anyone building agents.

You can ignore it and hope nothing breaks. Or you can use contracts built for what agents actually do, not what software did ten years ago.

Most founders choose hope. The ones who survive choose better infrastructure - and you'll soon find these MSAs baked into Paid's offering too.

→ Read the announcement on GitLaw

→ Listen to our conversation with Nick about building legal infrastructure for the agent economy.
